Chatbot LLM: Install an Open-Source Chatbot on Windows the Easy Way

In this blog, I'll walk you through building and deploying your own personalized chatbot using an open-source large language model (LLM), Meta's Llama 3, which we run locally with Ollama. We will start by installing everything in a Windows environment and then expose the chatbot with Ngrok for remote access.

To follow along, you can refer to my [GitHub repository](https://github.com/chelvadataer/personalized-bot.git), which contains all the code and resources.

Prerequisites

Before we dive in, ensure you have the following installed:

- Python 3.7 or above

- [Git](https://git-scm.com/download/win)

- [Ngrok](https://ngrok.com/download) (to give your local server a public URL)

- [Ollama](https://ollama.com), with the Llama 3 model downloaded (`ollama pull llama3`)

If you don't have these installed, make sure to install them first. Let's proceed with installing the necessary Python libraries and setting up the chatbot.
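If you would rather confirm the prerequisites from a script than by hand, a minimal sketch like the one below checks the Python version and whether the `git`, `ngrok`, and `ollama` commands can be found on your `PATH`. The function name `check_prerequisites` is hypothetical, and the 3.7 minimum simply mirrors the list above; the check only tells you that each command exists, not which version is installed.

```python
import shutil
import sys


def check_prerequisites(min_python=(3, 7), tools=("git", "ngrok", "ollama")):
    """Return a dict mapping each prerequisite to True (found) or False (missing).

    Only checks that the Python version is new enough and that each
    command-line tool is on PATH; it does not check tool versions.
    """
    results = {"python": sys.version_info[:2] >= tuple(min_python)}
    for tool in tools:
        # shutil.which returns the executable's full path if it is on PATH, else None
        results[tool] = shutil.which(tool) is not None
    return results


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name:<8} {'OK' if ok else 'MISSING'}")
```

Anything reported as `MISSING` should be installed (or added to `PATH`) before you continue.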

Step 1: Cloning the GitHub Repository

The first step is to clone the repository that contains our chatbot code:

```powershell
# Clone the repository
git clone https://github.com/chelvadataer/personalized-bot.git
cd personalized-bot
```

This repository contains a script that uses [Streamlit](https://streamlit.io) to build the chatbot's UI and Ollama to generate responses with the Llama 3 model (`llama3:latest`).

Step 2: Setting Up Your Environment

We will now set up a Python virtual environment and install the required libraries. Run the following commands in your terminal:

```powershell
# Create a virtual environment
python -m venv chatbot-env

# Activate the virtual environment (Windows)
chatbot-env\Scripts\activate

# Install required dependencies
pip install -r requirements.txt
```

This will install all the dependencies listed in the `requirements.txt` file, including Streamlit.

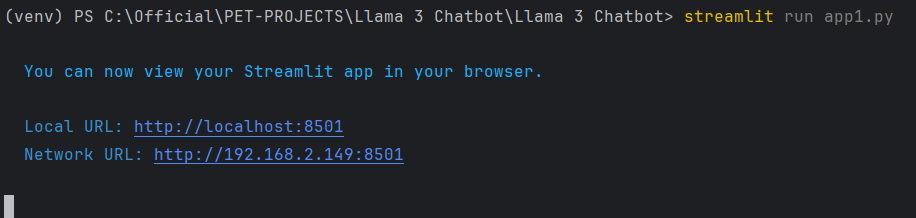
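To confirm that the virtual environment is active and that Streamlit was installed into it, you can run a short check from the activated terminal, for example saved as `check_env.py` (a hypothetical file name) and run with `python check_env.py`. It relies on the fact that inside a `venv`, `sys.prefix` points at the environment while `sys.base_prefix` still points at the base installation; the helper names are illustrative.

```python
import importlib.util
import sys


def in_virtualenv():
    """True when running inside a venv created with `python -m venv`."""
    # A venv sets sys.prefix to the environment folder, while
    # sys.base_prefix keeps pointing at the base Python installation.
    return sys.prefix != sys.base_prefix


def missing_packages(names=("streamlit",)):
    """Return the top-level module names that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Virtual environment active:", in_virtualenv())
    print("Missing packages:", missing_packages() or "none")
```

If the first line prints `False`, re-run the activation command before installing or running anything.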

Step 3: Running the Chatbot Locally

Now that the environment is set up, we can run our chatbot locally. The script in the repository is written in Python and uses Streamlit to create a simple web interface. To run the chatbot, use the following command:

```powershell
streamlit run chatbot.py
```

By default, Streamlit serves the app at `http://localhost:8501` and opens it in your browser; this is the port we will expose with Ngrok later.
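If the browser does not open on its own, a minimal sketch like this checks whether anything is listening on Streamlit's default port 8501. It only tests that a TCP connection succeeds, not that the chatbot page itself loaded correctly; the function name `is_port_open` is hypothetical.

```python
import socket


def is_port_open(host="localhost", port=8501, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and tries each address in turn
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or the host could not be resolved
        return False


if __name__ == "__main__":
    print("Streamlit reachable on port 8501:", is_port_open())
```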

Python Script Overview

Here's an overview of the Python script you can find in the repository:

python Code

import streamlit as st

import subprocess

# Define the function to interact with the Ollama model using subprocess

def get_ollama_response(prompt):

try:

# Run the 'llama3:latest' model and pass the prompt through stdin

result = subprocess.run(

['ollama', 'run', 'llama3:latest'],

input=prompt,

text=True,

capture_output=True,

encoding='utf-8', # Force utf-8 encoding

errors='ignore' # Ignore characters that can't be decoded

)

return result.stdout.strip()

except subprocess.CalledProcessError as e:

return f"Error: {str(e)}"

except Exception as e:

return f"Error: {str(e)}"

# Streamlit web interface

# Add logo to the app (Replace 'logo.png' with your actual logo file)

st.image("logo.svg", width=100) # Adjust width as per your needs

# Heading and tagline

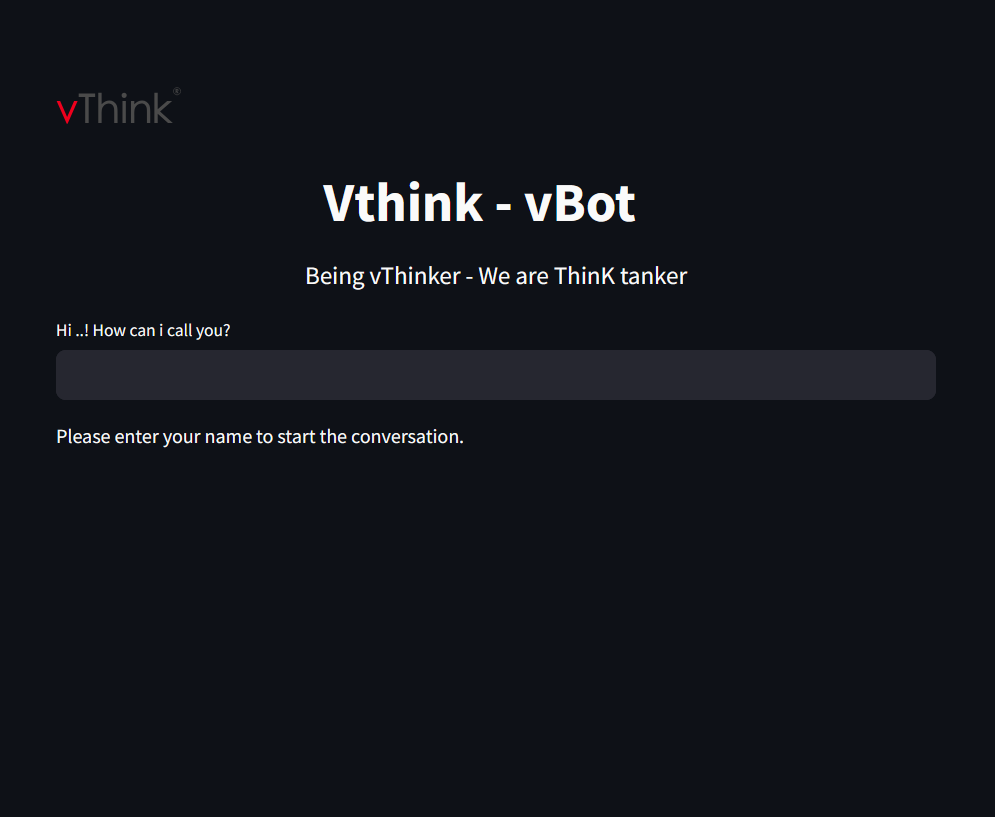

st.markdown("<h1 style='text-align: center;'>Vthink - vBot</h1>", unsafe_allow_html=True)

st.markdown("<p style='text-align: center; font-size:20px;'>Being vThinker - We are ThinK tanker</p>", unsafe_allow_html=True)

# Initialize session state for name and chat history

if "name" not in st.session_state:

st.session_state["name"] = ""

if "chat_history" not in st.session_state:

st.session_state["chat_history"] = []

# Ask for user's name if not provided

if not st.session_state["name"]:

st.session_state["name"] = st.text_input("Hi ..! How can i call you?", key="name_input")

# If we have the user's name, greet them

if st.session_state["name"]:

if len(st.session_state["chat_history"]) == 0:

# Greet the user with positive words before the first conversation

st.markdown(f"Hello, {st.session_state['name']}! 🌟 You're awesome, and I hope we have a great conversation today!")

# Input for user's question

user_input = st.text_input(f"What would you like to ask today, {st.session_state['name']}?", "", key="user_input")

# If there's user input, get the response from Ollama and update the chat history

if user_input and st.button("Send"):

ollama_response = get_ollama_response(user_input)

st.session_state.chat_history.append({"user": user_input, "bot": ollama_response})

# Display the chat history interactively

for i, chat in enumerate(st.session_state.chat_history):

st.markdown(f"**{st.session_state['name']}**: {chat['user']}")

# Display the bot's response in a text area for easy copying

st.text_area(f"Vthink_Bot Response {i + 1}", chat['bot'], height=100, key=f"bot_response_{i}")

# Option to clear chat history

if st.button("Clear Chat"):

st.session_state["chat_history"] = []

else:

st.write("Please enter your name to start the conversation.")

The script takes user input, sends it to the Ollama model via subprocess, and then displays the generated response on the web interface.
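If you want the subprocess call to fail more gracefully, a slightly more defensive variant of `get_ollama_response` is sketched below. Besides `check=True` (so a non-zero exit actually raises `CalledProcessError`), it adds a timeout so a hung model does not freeze the UI and a clear message when the `ollama` command is missing. The `command` and `timeout` parameters and `DEFAULT_COMMAND` are hypothetical additions (they also make the function testable without Ollama), and the 120-second default is an arbitrary choice.

```python
import subprocess

# Hypothetical default; matches the model used in the repository's script
DEFAULT_COMMAND = ["ollama", "run", "llama3:latest"]


def get_ollama_response(prompt, command=None, timeout=120):
    """Send `prompt` to the model on stdin and return its reply as text."""
    cmd = command or DEFAULT_COMMAND
    try:
        result = subprocess.run(
            cmd,
            input=prompt,
            capture_output=True,
            encoding="utf-8",  # encode stdin / decode stdout as UTF-8 (implies text mode)
            errors="ignore",   # drop bytes that are not valid UTF-8
            timeout=timeout,   # kill the process and raise TimeoutExpired if it hangs
            check=True,        # raise CalledProcessError on a non-zero exit code
        )
        return result.stdout.strip()
    except subprocess.TimeoutExpired:
        return f"Error: no response within {timeout} seconds"
    except subprocess.CalledProcessError as e:
        # stderr usually explains the failure (for example, a model that was never pulled)
        return f"Error: {(e.stderr or str(e)).strip()}"
    except FileNotFoundError:
        return f"Error: command not found: {cmd[0]}"
```

In the Streamlit app this can replace the original function unchanged: `get_ollama_response(user_input)` still runs `llama3:latest` when no `command` is passed.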

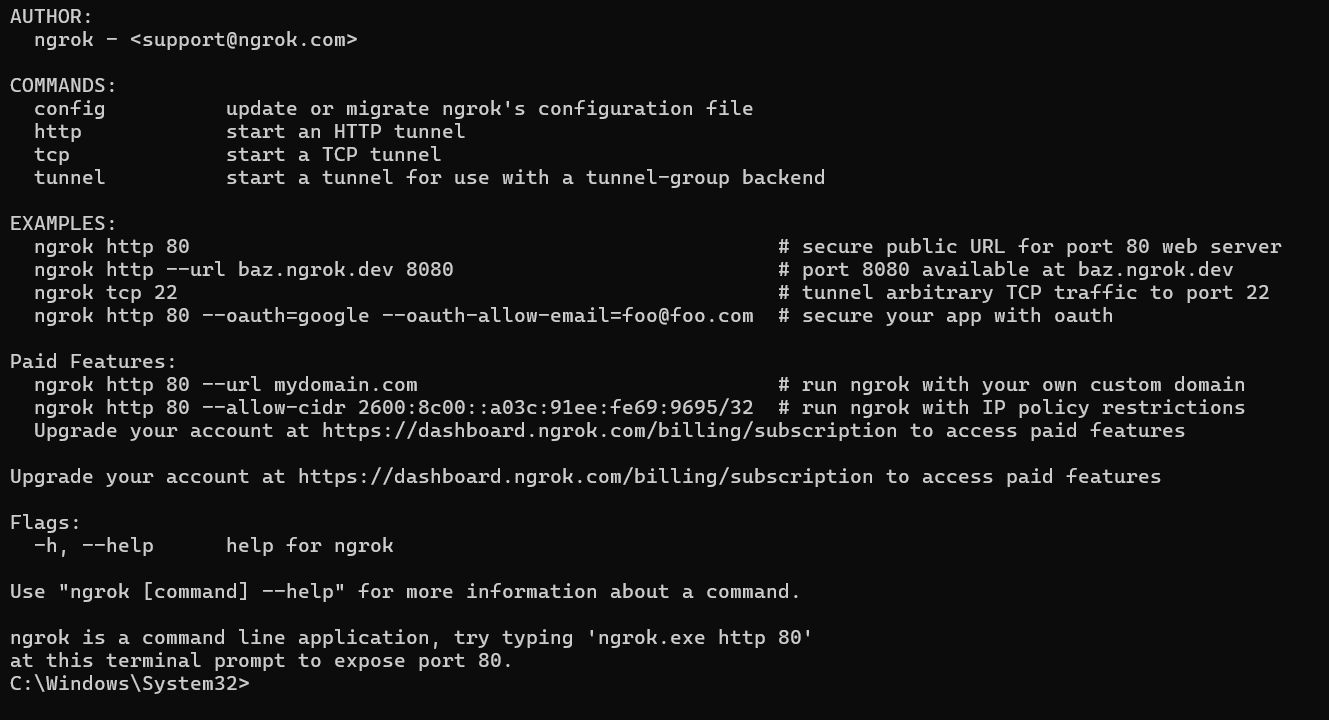

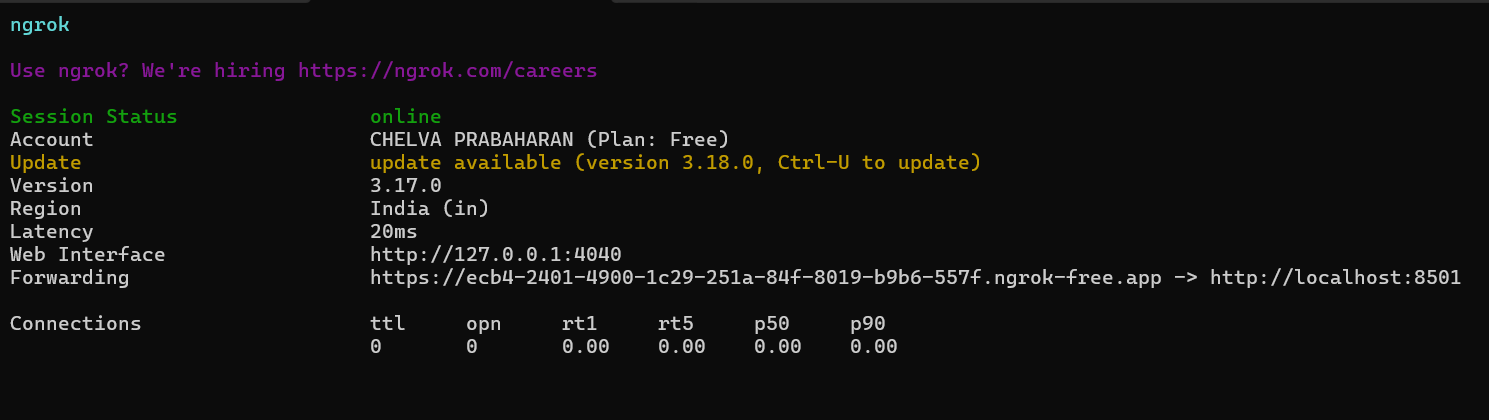
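Spawning `ollama run` for every message works, but Ollama also serves a local HTTP API, by default on `http://localhost:11434`. As an alternative to the subprocess approach used in the repository (a swapped-in technique, not what the repository does), a sketch using only the standard library could call the `/api/generate` endpoint with streaming turned off. The helper names are hypothetical, and the request building is kept separate from the network call so the JSON handling can be checked without a running server.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_generate_request(prompt, model="llama3:latest", url=OLLAMA_URL):
    """Build a POST request asking Ollama for a single, non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(body):
    """Extract the generated text from a non-streamed /api/generate JSON reply."""
    return json.loads(body)["response"].strip()


def ask_ollama(prompt, timeout=120):
    """Send the prompt to a running Ollama server and return its answer."""
    with urllib.request.urlopen(build_generate_request(prompt), timeout=timeout) as resp:
        return parse_generate_response(resp.read().decode("utf-8"))
```

Calling `ask_ollama(user_input)` in place of `get_ollama_response(user_input)` avoids starting a new process per question, at the cost of depending on the Ollama server being up.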

Step 4: Exposing Your Chatbot to the Internet Using Ngrok

By default, the Streamlit server is reachable only from your own machine (and, firewall permitting, from other devices on your local network). To make the chatbot accessible from anywhere, we'll use Ngrok.

Downloading and Configuring Ngrok

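If you haven’t already done so, download [Ngrok](https://ngrok.com/download). Once downloaded, follow these steps:

- Sign up for a free Ngrok account and register your authtoken once with `ngrok config add-authtoken <your-token>`; Ngrok will not open a tunnel without it.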

- In a second terminal (leave Streamlit running in the first), start Ngrok to expose the local Streamlit server on port 8501 to the public internet.

```powershell
# Start ngrok to create a public URL for your Streamlit app
ngrok http 8501
```

- You will see a forwarding address that looks something like `https://abcd1234.ngrok-free.app` (the exact domain depends on your Ngrok version and plan). You can use this URL to access your chatbot from any device with an internet connection.
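While the Ngrok agent is running, it also serves a local inspection API, by default at `http://127.0.0.1:4040/api/tunnels`, which lists the active tunnels as JSON. A small sketch for reading the public URL from it follows; the JSON shape it relies on (a `tunnels` list whose entries carry `public_url` and `proto`) is an assumption based on Ngrok's agent API, so check it against your Ngrok version. The function names are hypothetical.

```python
import json
import urllib.request

NGROK_API = "http://127.0.0.1:4040/api/tunnels"  # assumed default agent inspection address


def public_url_from_tunnels(body):
    """Return the first HTTPS public URL in an /api/tunnels JSON reply, or None."""
    tunnels = json.loads(body).get("tunnels", [])
    # Prefer an HTTPS tunnel; otherwise fall back to the first tunnel listed
    for tunnel in tunnels:
        if tunnel.get("proto") == "https":
            return tunnel.get("public_url")
    return tunnels[0].get("public_url") if tunnels else None


def current_ngrok_url(api_url=NGROK_API, timeout=5):
    """Query the running Ngrok agent and return its public URL, or None."""
    with urllib.request.urlopen(api_url, timeout=timeout) as resp:
        return public_url_from_tunnels(resp.read().decode("utf-8"))
```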

Step 5: Testing Your Chatbot

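Now that Ngrok is running, you can share the forwarding URL with your friends or use it to test the chatbot from a different device. You should see the same interface as on your own machine: the logo and heading, a prompt asking for the visitor's name, and then a personalized greeting above the question box. Keep in mind that anyone with the URL can use the chatbot, and every question is answered by the model running on your computer.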
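To check from a script that the app answers at the forwarding URL, a minimal sketch can request the page and report the HTTP status. Two assumptions: you pass in your own forwarding address, and the `ngrok-skip-browser-warning` header is sent because Ngrok's free plan may show a warning page to browser-like visitors first. A status of 200 only shows that the server responded, not that the model is working; `check_url` is a hypothetical name.

```python
import urllib.error
import urllib.request


def check_url(url, timeout=10):
    """Return the HTTP status code of a GET request to `url`, or None if unreachable."""
    request = urllib.request.Request(
        url,
        # Assumed to bypass Ngrok's free-plan browser warning page
        headers={"ngrok-skip-browser-warning": "true"},
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as e:
        return e.code  # the server answered, but with an error status
    except (urllib.error.URLError, OSError):
        return None  # DNS failure, refused connection, or timeout
```

For example, `check_url("https://abcd1234.ngrok-free.app")` (replace the placeholder with the address Ngrok printed) should return `200` while both Streamlit and Ngrok are running.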

Adding Images and Branding

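For a more professional-looking chatbot, consider adding your own images and branding. Replace the placeholder `logo.svg` with your company logo or any image of your choice; the path is resolved relative to the folder you run `streamlit run` from, so keeping the file next to `chatbot.py` is simplest.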

```python
# Add logo to the app
st.image("logo.svg", width=100)
```

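You can also change the chatbot's colors through Streamlit's theme settings (the `[theme]` section of `.streamlit/config.toml`) and adjust the layout with `st.set_page_config(layout="wide")` or layout elements such as `st.columns` and `st.sidebar`.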
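Streamlit reads theme colors from a `[theme]` section in `.streamlit/config.toml` inside the folder you run the app from. If you prefer to generate that file from Python, a minimal sketch is below; `primaryColor`, `backgroundColor`, `secondaryBackgroundColor`, and `textColor` are Streamlit theme options, while the helper name `write_theme` and the color values are only examples.

```python
from pathlib import Path


def write_theme(project_dir=".", primary="#1E88E5", background="#FFFFFF",
                secondary_background="#F0F2F6", text="#262730"):
    """Write a minimal Streamlit theme to <project_dir>/.streamlit/config.toml."""
    config_dir = Path(project_dir) / ".streamlit"
    config_dir.mkdir(parents=True, exist_ok=True)
    lines = [
        "[theme]",
        f'primaryColor = "{primary}"',
        f'backgroundColor = "{background}"',
        f'secondaryBackgroundColor = "{secondary_background}"',
        f'textColor = "{text}"',
    ]
    path = config_dir / "config.toml"
    # Note: this overwrites any existing config.toml in that folder
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return path
```

Run it once from the `personalized-bot` folder, then restart `streamlit run chatbot.py` to pick up the new colors.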

Conclusion

In this blog, we've learned how to set up and run a personalized chatbot powered by an open-source Llama 3 model served locally with Ollama, with a user-friendly UI built in Streamlit and remote access through Ngrok. This project is great for anyone who wants to build an interactive chatbot and make it accessible from anywhere. Feel free to modify and improve it further!

For the full code, additional images, and examples, visit my [GitHub repository](https://github.com/chelvadataer/personalized-bot.git).

Happy coding!

Next Steps

- Try personalizing the chatbot to respond in different styles.

- Add more features, such as voice input or integrating a knowledge base.

- Deploy it on a cloud server to make it more robust.
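For the first idea, one simple approach, assuming you keep the repository's subprocess call, is to prepend a style instruction to each prompt before it is piped to `ollama run`. The `STYLES` dictionary, its wording, and `build_prompt` are hypothetical examples, and how closely the model follows such instructions will vary.

```python
# Hypothetical style presets; edit the wording to suit your bot's personality
STYLES = {
    "friendly": "Answer in a warm, encouraging tone.",
    "concise": "Answer in at most three short sentences.",
    "teacher": "Explain step by step, as if teaching a beginner.",
}


def build_prompt(user_input, style="friendly", name=None):
    """Combine a style instruction, the user's name, and the question into one prompt."""
    instruction = STYLES.get(style, STYLES["friendly"])  # unknown styles fall back to friendly
    who = f" The user's name is {name}." if name else ""
    return f"{instruction}{who}\n\nQuestion: {user_input}"
```

In the app, you could let users pick a style with `st.selectbox("Style", list(STYLES))` and then call `get_ollama_response(build_prompt(user_input, style, st.session_state["name"]))`.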

What other features would you add? Let me know if you need any help extending the chatbot!

Looking to leverage cutting-edge AI technology for your business?

At vThink Global Technologies, we specialize in creating customized AI-powered solutions like chatbots, language models, and data-driven applications. Contact us today for a free consultation and see how we can help you stay ahead in the AI revolution.